- Joined

- Jul 20, 2012

- Messages

- 31

- Reaction score

- 1

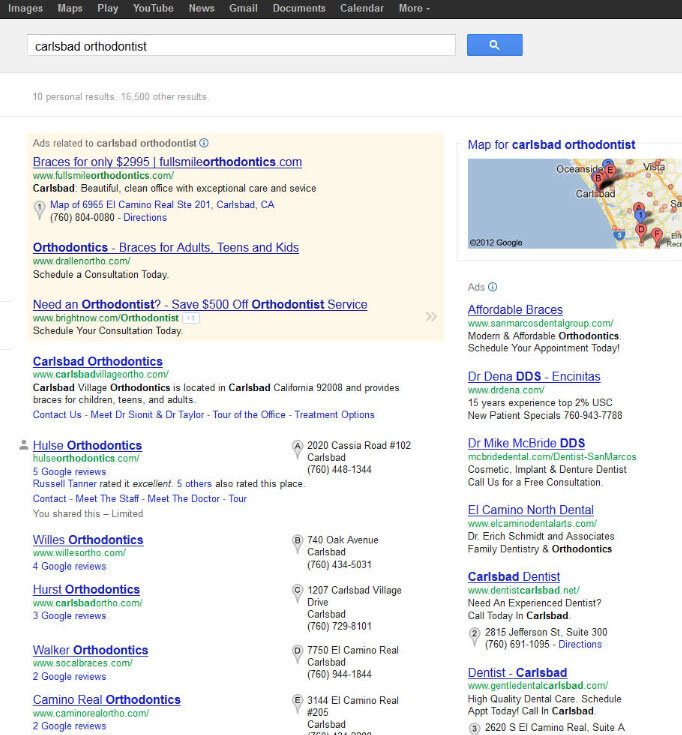

My company works specifically in the dental/orthodontic realm - which is admittedly more scrutinized than many industries in terms of Google reviews.

We've been chasing our tails trying to find any rhyme or reason as to why some reviews make it through while others don't. In general terms: the company (orthodontist) asks the client (patient) to consider leaving a review, and the client (patient) goes home and does so. It seems to be very rare that these posts actually go "live." Many posts here and on the Google forum have suggested that:

- someone with a history of reviews is more likely to have his/her review for the company (orthodontist) go live

- using a smartphone with the Google+ app also improves the likelihood

- there is some form of linguistic filter that is tripped when certain phrases are used, or when posts are too similar to one another

So we began to recruit actual patients of a few client orthodontists to perform a few tests as follows (each group is 2 people; we had 1 person per group per week attempt a review over 5 weeks):

- Patient Group 1 actively uses Google+ on home computer

- Patient Group 2 actively uses Google+ on smartphone

- Patient Group 3 is new to Google, uses home computer

- Patient Group 4 is new to Google, uses smartphone

We found very little discrepancy between the groups in the percentage of their posts that went "live." Groups 1 through 3 were each successful for the given orthodontic practice 0 out of 2 times. Ironically, group 4 (new, smartphone) had 1 out of 2.
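For anyone who wants to tabulate a test like this, here is a minimal Python sketch that tallies the results above by account history and by device. The names `GroupResult`, `RESULTS`, and `live_rate` are made up for illustration, and the counts assume "X out of 2" means 2 review attempts per group.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupResult:
    group: int      # patient group number from the post
    account: str    # "active" (uses Google+) or "new" (new to Google)
    device: str     # "computer" or "smartphone"
    live: int       # reviews that went live
    attempts: int   # reviews attempted (assumed to be 2 per group)


# Counts as reported in the post for the client practice.
RESULTS = [
    GroupResult(1, "active", "computer", 0, 2),
    GroupResult(2, "active", "smartphone", 0, 2),
    GroupResult(3, "new", "computer", 0, 2),
    GroupResult(4, "new", "smartphone", 1, 2),
]


def live_rate(results, **criteria):
    """Return (live, attempts) summed over groups matching all criteria."""
    selected = [
        r for r in results
        if all(getattr(r, key) == value for key, value in criteria.items())
    ]
    return sum(r.live for r in selected), sum(r.attempts for r in selected)


# Compare each factor on its own.
for label, criteria in [
    ("All groups", {}),
    ("Active Google+ users", {"account": "active"}),
    ("New to Google", {"account": "new"}),
    ("Home computer", {"device": "computer"}),
    ("Smartphone", {"device": "smartphone"}),
]:
    live, attempts = live_rate(RESULTS, **criteria)
    print(f"{label}: {live}/{attempts} live")
```

With only 2 attempts per group, a one-review difference between groups is too small to read much into, which fits the "very little discrepancy" observation.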

So we altered the experiment and asked two of the patients (one from group 2, one from group 4) who were unsuccessful posting for our client to make the EXACT same post for another practice 30 miles away in the same week (not a competitor, and a friend of our client). Both of those reviews went live.

My takeaway is that some pages must themselves have higher "trust value" with Google than others. So what the @$#& can we do to figure out what facet of a page makes it less trustworthy than another?