KieranThomas

Hi all,

I can't seem to find a solution to this particular issue so wondered if you awesome bunch have any ideas!

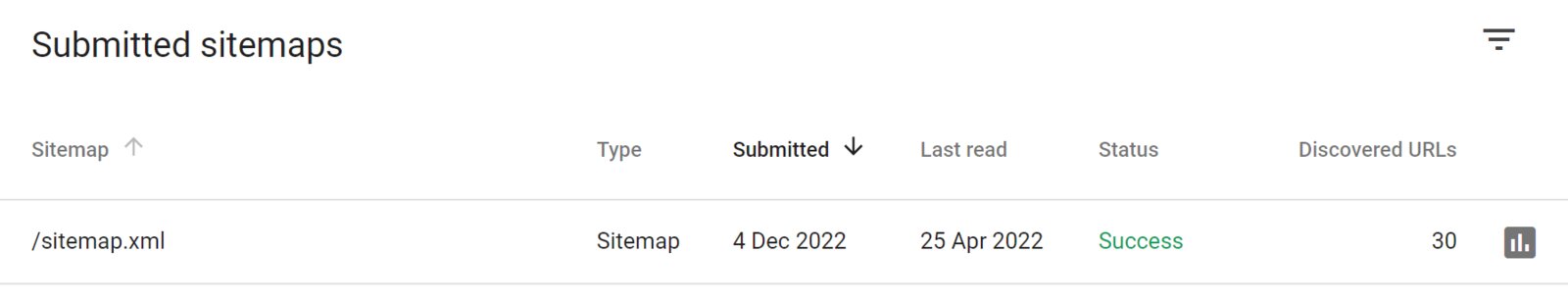

For one of our clients (and it only seems to impact this client), GSC is saying that the sitemap.xml file hasn't been crawled since 25th April 2022.

So far I've tried the following without success:

1) Ran it through several validators and none flag any issues. It passes as valid

2) Have used the GSC "Inspect" tool, and that also confirms that Google can access it OK

3) Have tried deleting it (several times) and resubmitting and leaving it for a while for Google to crawl. No joy
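For anyone who wants to repeat check 1 locally, here is a rough sketch of the kind of structural checks a sitemap validator runs. It is only an illustration, not the validators used above: `check_sitemap` and `fetch_sitemap` are made-up names, the User-Agent string is a placeholder, and the limits (50,000 entries, 50 MB uncompressed) are the ones from the sitemaps.org protocol. Passing these checks says nothing about whether Google will actually schedule a crawl.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Namespace and limits defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_ENTRIES = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50 MB, uncompressed


def check_sitemap(xml_bytes: bytes) -> list[str]:
    """Return a list of problems; an empty list means these basic checks passed."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file is larger than 50 MB uncompressed")

    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as exc:
        return problems + [f"not well-formed XML: {exc}"]

    # The root must be <urlset> or <sitemapindex> in the sitemap namespace.
    allowed_roots = (f"{{{SITEMAP_NS}}}urlset", f"{{{SITEMAP_NS}}}sitemapindex")
    if root.tag not in allowed_roots:
        return problems + [f"unexpected root element: {root.tag}"]

    locs = [el.text.strip() for el in root.iter(f"{{{SITEMAP_NS}}}loc") if el.text]
    if not locs:
        problems.append("no <loc> entries found")
    if len(locs) > MAX_ENTRIES:
        problems.append(f"{len(locs)} entries exceeds the 50,000 limit")
    for loc in locs:
        if not loc.startswith(("http://", "https://")):
            problems.append(f"<loc> is not an absolute URL: {loc}")
    return problems


def fetch_sitemap(url: str) -> bytes:
    """Download a sitemap. The User-Agent below is a placeholder, not Googlebot's."""
    request = urllib.request.Request(url, headers={"User-Agent": "sitemap-check/0.1"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read()
```

Usage would be something like `check_sitemap(fetch_sitemap("https://example.com/sitemap.xml"))`, with the real sitemap URL swapped in for the example.com placeholder.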

Interestingly, we also have another odd issue with this client that we have for no other clients so I'd be curious to know if it could be related....

We often get a "Quota exceeded" error message even if we haven't tried to submit any URLs that day. Very occasionally Google will allow us to submit 1-2 URLs but then the error starts to appear again and can last for weeks.

The quality of the site is OK as far as we're aware in that there are no security issues or manual actions.

Some of the content is "thin", which we're trying to improve but with some resistance from the client. However, that shouldn't be why Google isn't crawling the sitemap.

Any ideas on how we can get Google to crawl the sitemap?
